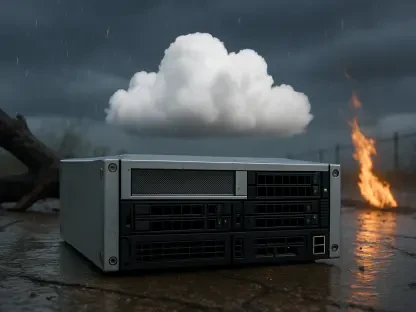

The seamless integration of artificial intelligence into daily life has long been hampered by its reliance on distant cloud servers, which introduces a noticeable lag between a user’s command and the resulting action. This architecture has been the standard for years, but it brings significant challenges: network latency, the high operational cost of running massive data centers, and growing concern over how personal data is transmitted and stored. In response, a significant technological pivot is underway, shifting AI processing from the cloud directly onto the user’s device. This move toward edge AI aims to make artificial intelligence more efficient, responsive, and secure. Google’s FunctionGemma, a key innovation in this space, exemplifies the new direction by enabling devices to understand and execute commands locally, pointing toward a new era of on-device intelligence that responds faster and keeps more user data private.

A New Paradigm in AI Execution

Redefining AI Interaction on Mobile Devices

FunctionGemma fundamentally alters the relationship between users and their devices by moving away from the general-purpose conversational models that dominate the cloud. Instead of generating prose or engaging in open-ended dialogue, this specialized model is built for a single, critical purpose: translating natural-language commands into precise, structured function calls that an application or the operating system can act on immediately. When a user issues a command, the entire translation happens on the device, eliminating the round trip to an external server. The result is a near-instantaneous response, without the delay that often accompanies cloud-based AI interactions. And because processing is local, these functions keep working without a network connection, making AI-powered features more reliable in areas with poor or no connectivity. The shift is from a passive request-and-wait model to an immediate command-and-execute one, which makes the interaction feel more fluid and intuitive.
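To make the command-to-action translation concrete, here is a minimal Python sketch of the device-side half of the loop: a registry of functions an app exposes, and a dispatcher that runs whichever one the model names. It assumes the model emits a JSON object with `name` and `arguments` keys; that format, and the functions `set_alarm` and `toggle_flashlight`, are hypothetical illustrations, not FunctionGemma’s documented output format or API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry of device functions the model is allowed to call.
# These names are illustrative only; they are not part of any FunctionGemma API.
FUNCTIONS: Dict[str, Callable[..., Any]] = {}


def device_function(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a local function so model output can refer to it by name."""
    FUNCTIONS[fn.__name__] = fn
    return fn


@device_function
def set_alarm(hour: int, minute: int) -> str:
    return f"Alarm set for {hour:02d}:{minute:02d}"


@device_function
def toggle_flashlight(on: bool) -> str:
    return "Flashlight on" if on else "Flashlight off"


def execute(model_output: str) -> str:
    """Parse a structured call (assumed JSON format) and run it locally.

    Unknown function names are rejected, so the model can only trigger
    actions the app has explicitly exposed.
    """
    call = json.loads(model_output)
    name, args = call["name"], call.get("arguments", {})
    if name not in FUNCTIONS:
        raise ValueError(f"Unknown function: {name}")
    return FUNCTIONS[name](**args)


# Pretend the on-device model turned "wake me at 6:30" into this string.
print(execute('{"name": "set_alarm", "arguments": {"hour": 6, "minute": 30}}'))
```

Checking names against an explicit registry is a deliberate choice: the model can only trigger actions the developer has chosen to expose, and an unexpected call fails loudly instead of running arbitrary code.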

Fortifying User Data with Local Processing

In an age of heightened public and regulatory scrutiny over data handling, on-device processing offers a strong answer to prevailing privacy concerns. Because FunctionGemma interprets commands locally, the requests it handles never need to leave the device. This design addresses data privacy at its root: personal information, voice recordings, and other sensitive inputs processed this way are not transmitted to, or stored on, company servers. Keeping the operation within the user’s own hardware substantially reduces the opportunities for data misuse or unauthorized access. This local-first approach fits the growing demand for greater user control and transparency over personal information. It lets technology companies offer advanced AI features without eroding user trust, because privacy is protected by design rather than by policy alone. That built-in protection is a cornerstone of the on-device AI movement, giving users a tangible assurance that the commands handled locally stay private.

Strategic Implications and Future Outlook

Architecting a Hybrid AI Ecosystem

The introduction of on-device solutions like FunctionGemma does not signal the end of cloud-based AI; it marks the beginning of a more efficient hybrid model that balances local and cloud resources across a wide range of tasks. Simple, routine commands and frequently used functions run on the device itself, which gives users immediate feedback and reduces constant demand on cloud infrastructure. More complex and computationally intensive work, such as training large models or processing massive datasets, remains on Google’s cloud servers. This strategy offers clear commercial benefits, allowing both Google and third-party developers to reduce the high and often unpredictable costs of running sophisticated AI models exclusively in the cloud. For consumers, it means faster, more reliable AI features for everyday tasks, without the latency and privacy trade-offs of routing every request through a remote server, and a more balanced, sustainable technological ecosystem overall.
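The routing decision at the heart of such a hybrid setup can be sketched in a few lines of Python. This is a hypothetical design, not a Google API: `HybridRouter`, `FunctionCall`, and the handler names are illustrative, and `cloud_handler` is a stub standing in for whatever remote service an app would actually use. The assumption is that the on-device model either produces a call for a locally registered function, which runs immediately, or produces nothing usable, in which case the request is escalated.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple


@dataclass
class FunctionCall:
    """A structured call as an on-device model might emit it (assumed shape)."""
    name: str
    arguments: Dict[str, Any] = field(default_factory=dict)


@dataclass
class HybridRouter:
    """Run calls locally when possible; otherwise defer to a cloud stub."""
    local_handlers: Dict[str, Callable[..., Any]]
    cloud_handler: Callable[[str], Any]  # placeholder for a remote service
    log: List[Tuple[str, str]] = field(default_factory=list)

    def route(self, utterance: str, call: Optional[FunctionCall]) -> Any:
        # Fast path: the local model produced a call we can execute on-device.
        if call is not None and call.name in self.local_handlers:
            self.log.append(("local", call.name))
            return self.local_handlers[call.name](**call.arguments)
        # Slow path: no usable local call, so the request leaves the device.
        self.log.append(("cloud", utterance))
        return self.cloud_handler(utterance)


router = HybridRouter(
    local_handlers={"set_volume": lambda level: f"Volume set to {level}"},
    cloud_handler=lambda text: f"[cloud] handling: {text}",
)

print(router.route("turn the volume to 7", FunctionCall("set_volume", {"level": 7})))
print(router.route("summarize my last 50 emails", None))
print(router.log)
```

In this sketch only escalated requests leave the device, so the privacy and latency benefits apply to the routine commands handled locally; the `log` list simply records which path each request took.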

A New Standard for Responsive Applications

The deployment of FunctionGemma marks a notable step in a broader industry trend toward more responsive, reliable, and privacy-conscious AI applications. Its focus on executing specific tasks at the device level reflects a strategic pivot toward a more balanced and sustainable ecosystem for artificial intelligence. By emphasizing local processing, the industry is finding a way to balance advanced technological capabilities with the assurances users expect, including speed, accuracy, and robust data security. The approach reflects an understanding that, in today’s digital landscape, performance and privacy need not be mutually exclusive. If this hybrid model, which blends the immediacy of on-device AI with the power of the cloud, proves successful, it could set a new benchmark for how future intelligent systems are designed and integrated into everyday technology.