In an era where Artificial Intelligence (AI) is reshaping everything from everyday communication to complex industrial processes, the unsung heroes behind this transformation are semiconductors—those tiny silicon chips that power the sprawling data centers at the heart of AI innovation. These facilities, often hidden from public view, process staggering volumes of data to train models that can generate human-like text, recognize images, or even guide autonomous vehicles. Without the relentless advancements in semiconductor technology, the explosive growth of AI, particularly in generative models and large language models (LLMs), would grind to a halt. The relationship between AI and semiconductors has sparked what industry insiders term a “silicon supercycle,” a period of unprecedented expansion that is redefining the technological landscape. This surge is not just about faster chips; it’s about enabling a future where AI becomes seamlessly integrated into daily life. However, with great progress come significant challenges, including energy consumption, supply chain vulnerabilities, and geopolitical tensions. Delving into this dynamic interplay reveals how semiconductors are not merely supporting AI but actively shaping its trajectory across industries and societies.

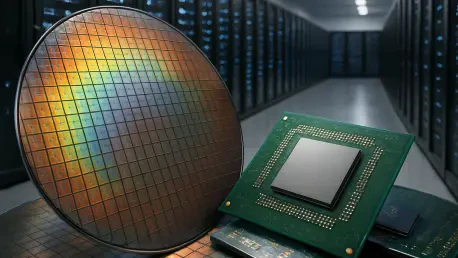

Technological Innovations in Semiconductors

Pioneering Chip Designs for AI Workloads

The rapid evolution of AI demands semiconductor designs that can keep pace with increasingly complex computational needs, and industry leaders are stepping up with groundbreaking solutions. Specialized chips like NVIDIA’s Blackwell GPUs and Google’s Tensor Processing Units (TPUs) are engineered to excel in parallel processing, a critical requirement for training massive AI models that handle billions of parameters. These designs prioritize high-speed data handling, ensuring that vast datasets can be processed efficiently. By addressing longstanding bottlenecks such as the “memory wall”—where data transfer speeds lag behind processing power—these innovations enable data centers to perform at levels previously unimaginable. This leap forward is essential for applications ranging from natural language processing to real-time image recognition, where split-second decisions are paramount.

Beyond the headline-grabbing GPUs and TPUs, advancements in memory technology are equally transformative for AI data centers, shaping the future of high-performance computing. High-Bandwidth Memory (HBM3 and HBM3E) offers a dramatic increase in data throughput, providing the bandwidth necessary to feed enormous datasets into AI accelerators without delay. This isn’t just a minor upgrade; it’s a fundamental shift that reduces processing times and enhances energy efficiency, making large-scale AI deployment more economically viable. As data centers scale to meet global demand, these memory solutions ensure that the infrastructure can support the next generation of AI applications, from personalized virtual assistants to sophisticated predictive analytics in healthcare and finance.
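One common way to reason about the “memory wall” and the value of extra memory bandwidth is the roofline model, which is not named in this article and is used here only as an illustration. It caps attainable throughput at the lower of peak compute and memory bandwidth multiplied by arithmetic intensity (operations performed per byte moved). The sketch below is a minimal example under stated assumptions: the function name and the accelerator figures are hypothetical and do not describe any real chip.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, flops_per_byte: float) -> float:
    """Roofline bound: the lower of the compute ceiling and the memory ceiling."""
    # (TB/s) * (FLOP/byte) = TFLOP/s
    memory_ceiling = bandwidth_tb_s * flops_per_byte
    return min(peak_tflops, memory_ceiling)


# Hypothetical accelerator figures, chosen only for illustration.
PEAK_TFLOPS = 1000.0
BANDWIDTH_TB_S = 4.0

if __name__ == "__main__":
    for intensity in (10, 100, 250, 1000):
        perf = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TB_S, intensity)
        limit = "memory-bound" if perf < PEAK_TFLOPS else "compute-bound"
        print(f"{intensity:>5} FLOP/byte -> {perf:7.1f} TFLOP/s ({limit})")
```

With these assumed numbers, a workload needs at least 250 operations per byte before the compute units, rather than memory, become the limit. Raising bandwidth lowers that threshold, which is why faster memory can matter as much as faster arithmetic.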

Overcoming Computational Challenges

AI’s insatiable appetite for computational power requires more than just faster chips; it demands smarter architectures that optimize every aspect of data processing. Interconnect technologies like NVIDIA’s NVLink and Compute Express Link (CXL) are designed to reduce the cost of moving data between processors, accelerators, and memory, a notorious drag on performance that can slow down even the most powerful processors. By streamlining communication between components, these innovations help AI models train and deploy faster. This is particularly crucial for real-time inference tasks, where delays can undermine user experience or critical decision-making in fields like autonomous driving.
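To see why data movement is such a drag, a rough first-order estimate treats transfer time as a fixed latency plus payload size divided by link bandwidth. The sketch below uses that simplification; the function name and both link profiles are hypothetical and are not published figures for NVLink, CXL, or any other product.

```python
def transfer_seconds(num_bytes: int, bandwidth_gb_s: float, latency_us: float = 0.0) -> float:
    """First-order transfer time: fixed latency plus size divided by bandwidth."""
    return latency_us * 1e-6 + num_bytes / (bandwidth_gb_s * 1e9)


if __name__ == "__main__":
    payload = 10 * 10**9  # 10 GB of activations or weights (illustrative)
    # Two hypothetical links; the numbers are assumptions, not vendor specs.
    slow = transfer_seconds(payload, bandwidth_gb_s=50.0, latency_us=5.0)
    fast = transfer_seconds(payload, bandwidth_gb_s=500.0, latency_us=2.0)
    print(f"slow link: {slow * 1e3:.1f} ms, fast link: {fast * 1e3:.1f} ms")
```

For large payloads the bandwidth term dominates, so a tenfold faster link cuts the transfer time almost tenfold. For many small messages the latency term matters more.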

Additionally, architectural breakthroughs such as dual-GPU chiplet designs are pushing the boundaries of what data centers can achieve. These configurations amplify processing capabilities by allowing two GPU dies to work in tandem, which can come close to doubling the computational power available per package for AI workloads. Such advancements mean that even the most demanding tasks, such as training a language model with trillions of parameters, can be spread across systems without overloading any one of them. As a result, data centers equipped with these cutting-edge semiconductors are better positioned to handle the escalating demands of modern AI, ensuring that innovation in software is matched by equally robust hardware solutions.
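A quick back-of-the-envelope calculation shows why models at this scale cannot fit on a single device. The sketch below counts only the memory needed to hold the weights. It assumes 2 bytes per parameter (a 16-bit format) and a hypothetical 192 GB of memory per accelerator; both values are illustrative assumptions. Training needs considerably more than this, because gradients and optimizer state must be stored as well.

```python
import math


def weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Memory needed just to store the weights."""
    return num_params * bytes_per_param


def min_devices(total_bytes: int, device_memory_bytes: int) -> int:
    """Smallest number of devices whose combined memory can hold total_bytes."""
    return math.ceil(total_bytes / device_memory_bytes)


if __name__ == "__main__":
    params = 10**12                  # one trillion parameters
    per_device = 192 * 10**9         # hypothetical 192 GB per accelerator
    total = weight_bytes(params)     # 2 bytes per parameter (assumed 16-bit)
    print(f"weights: {total / 1e12:.1f} TB")
    print(f"devices needed for weights alone: {min_devices(total, per_device)}")
```

Under these assumptions the weights alone occupy 2 TB and need at least 11 devices, before any working memory is counted.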

Industry Dynamics and Competition

The AI Arms Race Among Chipmakers

The semiconductor industry finds itself in the midst of a high-stakes “AI arms race,” where dominance in AI hardware translates to significant market power and influence. NVIDIA currently leads the pack, commanding over 80% of the AI GPU market, largely due to its robust CUDA software ecosystem that simplifies development for AI applications. This stronghold has made NVIDIA a cornerstone of AI data center infrastructure, powering everything from academic research to commercial deployments. However, this dominance also fuels intense competition, as other players recognize the strategic importance of capturing a share of this rapidly growing sector.

Challenging NVIDIA’s reign are competitors like AMD with its MI300 series and Intel with Gaudi 3, both of which are making inroads through a combination of innovative design and competitive pricing. Meanwhile, major cloud providers such as Google, Amazon, and Microsoft are not content to rely on external suppliers; they are investing heavily in custom silicon, including Google’s TPUs, Amazon’s Trainium and Inferentia, and Microsoft’s Maia, to tailor hardware specifically for their AI workloads. This push for independence reflects a broader trend of specialization and cost optimization. The resulting wave of innovation keeps the semiconductor landscape dynamic and fiercely contested, which should ultimately benefit end users with better, faster technology.

Role of Foundries and Memory Suppliers

Behind the flashy headlines of GPU makers, foundries like TSMC and Samsung play an indispensable role in the AI boom by manufacturing chips at cutting-edge process nodes such as 3nm and 2nm. These advanced fabrication techniques are vital for producing semiconductors that pack more power into smaller footprints, a necessity for the compact, high-density environments of modern data centers. Without the precision and scale of these foundries, the ambitious designs of chipmakers would remain theoretical, underscoring their quiet but critical contribution to AI’s growth.

Equally important are memory suppliers like Micron and SK Hynix, who are grappling with skyrocketing demand for High-Bandwidth Memory (HBM) essential for AI accelerators. Supply shortages in this area have become a bottleneck, highlighting HBM’s strategic significance in sustaining the pace of AI development. These suppliers face immense pressure to ramp up production while maintaining quality, as any disruption can ripple through the entire data center ecosystem. Their role, though often overlooked, is a linchpin in ensuring that the hardware foundation for AI remains robust, even as market demands and technological complexity continue to escalate.

Economic and Societal Impacts

The Silicon Supercycle and Economic Growth

Economically, the synergy between AI and semiconductors is creating a tidal wave of growth, often referred to as the “silicon supercycle,” with profound implications for global markets. Industry forecasts suggest an 18% revenue increase for the semiconductor sector in the current year, driven largely by AI-related demand. By 2030, nearly half of the industry’s capital expenditure is expected to be tied to AI, reflecting a cycle of investment that fuels further innovation. This economic momentum not only boosts established companies but also reshapes entire supply chains, creating ripple effects that touch industries far beyond technology, from manufacturing to logistics.

However, this boom also introduces stark disparities within the sector. While major players with deep pockets can pour resources into research and development, smaller firms and startups often struggle with the prohibitive costs of entry, particularly in accessing advanced chip designs. Cloud-based tools offer some relief by democratizing access to computational resources, but the gap between the haves and have-nots remains wide. As data center investments are projected to reach $1 trillion by 2028, the economic stakes of this supercycle become clear, influencing job creation, infrastructure development, and even national competitiveness on a global stage.

Societal Challenges and Environmental Concerns

The societal implications of the AI data center boom, powered by semiconductors, are as significant as the economic ones, and they often cut both ways. On one hand, AI promises transformative benefits, enhancing everything from medical diagnostics to urban planning through data-driven insights. On the other hand, the data centers that support these advancements pose serious environmental challenges. Projections indicate that global electricity consumption by data centers could more than double to about 945 TWh per year by 2030, driven by AI servers that consume significantly more energy than traditional setups, straining grids and amplifying carbon emissions.
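To put the 945 TWh projection in grid terms, it helps to convert annual energy into average continuous power. The sketch below does only that unit conversion; the function name is illustrative, and the result is a yearly average that says nothing about peak load or regional distribution.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def average_power_gw(annual_twh: float) -> float:
    """Convert annual energy (TWh per year) into average continuous power (GW)."""
    annual_gwh = annual_twh * 1000.0  # 1 TWh = 1000 GWh
    return annual_gwh / HOURS_PER_YEAR


if __name__ == "__main__":
    print(f"945 TWh/year is about {average_power_gw(945):.1f} GW on average")
```

The conversion gives roughly 108 GW of demand running around the clock, which is why grid capacity features so prominently in discussions of data center growth.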

Addressing this energy crisis requires urgent innovation in semiconductor design and data center operations to ensure sustainable growth in technology. Energy-efficient chips that maximize performance per watt are becoming a priority, alongside advanced cooling technologies like direct-to-chip liquid cooling that reduce the thermal load. Additionally, AI-optimized software can help manage power usage more effectively, ensuring that resources are allocated only where needed. These solutions are not just technical fixes; they are essential for aligning the growth of AI with sustainable practices, ensuring that societal benefits do not come at an unsustainable cost to the environment.

Global Challenges and Vulnerabilities

Supply Chain Risks and Geopolitical Tensions

The semiconductor industry, despite its technological prowess, rests on a fragile foundation due to the concentrated nature of its supply chain, posing significant risks to the AI data center boom. A substantial portion of advanced chip production is localized in Taiwan and South Korea, making the sector vulnerable to disruptions from natural disasters, political instability, or economic shocks in these regions. Such geographic dependency creates a domino effect; a single event can halt production, delay AI deployments, and ripple through global tech markets, underscoring the precarious balance of this critical industry.

Geopolitical tensions further exacerbate these vulnerabilities, particularly with trade restrictions between major powers like the US and China limiting access to cutting-edge semiconductors. These policies, often driven by national security concerns, have turned chips into strategic assets, complicating international supply chains. As a result, many nations are reevaluating their reliance on foreign manufacturing, with potential delays in AI infrastructure growth if access to essential components is curtailed. This intersection of technology and politics highlights how semiconductors are not just tools of innovation but also pawns in broader global power dynamics.

Addressing Global Instabilities

Mitigating the risks tied to supply chain concentration is a complex but pressing priority for sustaining the AI data center boom. Governments and corporations are increasingly investing in domestic manufacturing capabilities to reduce dependence on a handful of global hubs. Initiatives to build local fabrication plants aim to create a buffer against international disruptions, though these efforts require substantial time, capital, and expertise to reach the scale of established foundries. Such moves are not just about resilience; they also reshape economic priorities, channeling resources into tech independence.

International collaboration offers another path to stability, though it comes with its own set of challenges. By fostering partnerships, countries can share resources and expertise to diversify supply chains, ensuring that no single region holds disproportionate control over semiconductor production. However, aligning diverse national interests and navigating trade barriers remain significant hurdles. These strategies, while imperfect, are critical steps toward safeguarding the global infrastructure that powers AI, ensuring that data centers can continue to expand without being derailed by unforeseen geopolitical or environmental crises.

Future Outlook for AI and Semiconductors

Near-Term Trends in Chip Technology

Looking at the immediate horizon, the semiconductor industry is poised to deliver even more specialized solutions tailored for AI data centers over the next few years. Advanced packaging techniques, such as 2.5D and 3D stacking, are set to enhance chip performance by allowing greater density and efficiency within constrained spaces. Smaller process nodes, shrinking down to 2nm and beyond, will further boost computational power while reducing energy demands, a critical factor as data centers scale. These developments promise to keep pace with AI’s relentless growth, ensuring infrastructure can handle increasingly sophisticated workloads.

Another promising trend is the adoption of optical interconnects built on silicon photonics, which offer lower-latency data transmission than traditional electrical connections. This technology could revolutionize how data moves within and between data centers, slashing delays and energy use in processes that underpin real-time AI applications. As these innovations roll out, they will likely redefine operational benchmarks for data centers, making them faster and more resilient. The focus on near-term advancements reflects a pragmatic approach to addressing current limitations while laying the groundwork for more transformative shifts in the years ahead.

Long-Term Visions and Emerging Paradigms

Peering further into the future, the semiconductor landscape holds visionary possibilities that could fundamentally alter AI data center capabilities beyond the next five years. Neuromorphic computing, inspired by the human brain’s structure, stands out as a potential game-changer, with initiatives like Intel’s Loihi chips aiming to drastically cut energy consumption while maintaining high performance. This approach could unlock new efficiencies, making AI systems viable for applications where power constraints are a limiting factor, such as in remote or mobile environments.

Equally compelling are concepts like “AI-native data centers,” designed from the ground up to optimize AI workloads rather than retrofitting existing infrastructure. Paired with cutting-edge materials like carbon nanotubes, which offer superior conductivity and strength compared to silicon, these ideas could redefine hardware paradigms. Such advancements might enable breakthroughs in diverse fields, from real-time healthcare diagnostics to fully autonomous systems in transportation. While these long-term visions are speculative, they highlight the boundless potential of semiconductor innovation to expand AI’s reach into uncharted territory.

Persistent Challenges to Overcome

Despite the optimism surrounding future developments, significant hurdles remain that could impede the semiconductor-driven AI boom if left unaddressed. Escalating power demands continue to loom large, as data centers consume ever-greater amounts of energy to support AI’s computational needs, challenging grid capacities worldwide. Manufacturing costs are also spiraling upward with each technological leap, as smaller process nodes and advanced materials require increasingly sophisticated production methods, straining budgets even for industry giants.

Compounding these issues is a persistent shortage of skilled labor capable of designing, producing, and maintaining the next generation of semiconductors, which threatens to slow innovation at a time when speed is critical to progress. Overcoming these obstacles will demand more than just technical solutions; it will require coordinated efforts across industries, educational institutions, and governments to build a workforce and infrastructure capable of sustaining growth. Addressing these challenges head-on is essential to ensure that the promise of AI and semiconductors is not undermined by practical limitations.

Balancing Innovation with Sustainability

Looking back at the role of semiconductors in powering the AI data center boom, it is evident that efforts so far have focused intensely on pushing technological boundaries to meet soaring computational demands. Industry pioneers have tackled immense challenges in chip design and architecture, allowing AI models to scale from niche experiments to global tools. Collaboration among chipmakers, foundries, and cloud providers has built a foundation where innovation thrives, even amid fierce competition and economic pressures. The environmental and geopolitical hurdles encountered so far have been met with initial steps toward efficiency and supply chain diversification, setting a precedent for resilience.

Moving forward, the emphasis must shift toward harmonizing this relentless drive for advancement with sustainable practices, ensuring that progress does not come at the expense of environmental or geopolitical stability. Stakeholders should prioritize investment in energy-efficient technologies and renewable power sources for data centers to mitigate environmental impact. Simultaneously, fostering global partnerships to diversify supply chains can reduce vulnerabilities, ensuring stability in the face of uncertainties. By integrating these actionable strategies, the industry can sustain the momentum of the silicon supercycle, guaranteeing that AI’s transformative potential benefits societies worldwide without compromising planetary health or geopolitical balance.