Observability has become a core discipline for keeping complex software systems reliable and performant. It is usually defined as the ability to understand a system’s internal behavior from the telemetry it emits, chiefly logs, metrics, and traces, so that teams can detect, diagnose, and fix problems. Organizations build systems on different architectural patterns, so their approach to observability has to fit the architecture in question. This article compares observability practices in monolithic and microservices architectures, covering the challenges each one presents and the strategies and tools that suit it. The goal is to give DevOps and Site Reliability Engineering (SRE) teams practical guidance for working with either model.

Architectural Foundations and Observability Challenges

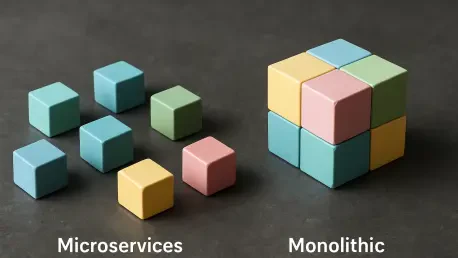

In monolithic architectures, the system runs as a single, cohesive unit in which all components are tightly integrated and deployed together. This simplifies observability considerably, because monitoring and logging can be centralized with relatively little effort. Metrics often focus on server-level indicators such as CPU usage, memory consumption, and input/output operations. Performance tools are typically straightforward and aggregate data in a single context. As these systems grow, however, finding the root cause of an issue becomes harder. Host-level metrics may show that the application is slow without revealing which internal module is responsible. Without more granular telemetry, pinpointing bottlenecks or failures among tightly coupled internal dependencies becomes a slow, manual task.
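
To make "granular telemetry" concrete, here is a minimal sketch of per-component latency tracking inside a single process. It assumes a hypothetical in-memory registry (`LATENCIES`) and decorator (`timed`) rather than any particular metrics library. In a real monolith, an APM agent or metrics SDK would play this role. The component names `billing` and `catalog` are illustrative only.

```python
import time
from collections import defaultdict
from functools import wraps

# Hypothetical in-process registry: component name -> observed durations in seconds.
LATENCIES = defaultdict(list)


def timed(component):
    """Decorator that records the wall-clock duration of each call under `component`."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                # Recorded even when the call raises, so failing paths are timed too.
                LATENCIES[component].append(time.perf_counter() - start)
        return wrapper
    return decorator


def slowest_components(n=3):
    """Return up to n (component, mean_seconds) pairs, slowest first."""
    means = {name: sum(d) / len(d) for name, d in LATENCIES.items() if d}
    return sorted(means.items(), key=lambda item: item[1], reverse=True)[:n]


@timed("billing")
def compute_invoice():
    time.sleep(0.02)  # simulated slow internal module


@timed("catalog")
def load_catalog():
    time.sleep(0.001)  # simulated fast internal module


if __name__ == "__main__":
    for _ in range(3):
        compute_invoice()
        load_catalog()
    for name, mean in slowest_components():
        print(f"{name}: {mean * 1000:.1f} ms")
```

Every component runs in the same process, so a single registry can rank internal modules by latency. Host-level CPU and memory graphs cannot provide that breakdown.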

Microservices, on the other hand, distribute the system across independent services that communicate over the network, which makes observability far more complex. Each service runs autonomously, often in containers or serverless functions. Each one emits its own stream of telemetry, which must be correlated with the others before it yields useful answers. Distributed tracing becomes essential for following a request as it traverses multiple services. It exposes latency or errors that would otherwise stay hidden between service boundaries. Frequent deployments and short-lived components add another layer of difficulty. Traditional monitoring approaches are built around a fixed set of long-lived hosts, and they struggle to keep up with this constant change and the sheer volume of data. Maintaining visibility across the whole architecture therefore calls for tooling designed for dynamic, distributed systems.
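
The sketch below illustrates the core idea behind distributed tracing: a shared trace identifier travels with each request. It uses the `traceparent` header format from the W3C Trace Context specification (`version-traceid-parentid-flags`). The services and the `handle` function are hypothetical. In practice, an instrumentation library such as OpenTelemetry generates and propagates these headers for you. Only the four-field version `00` format is handled here.

```python
import secrets


def new_traceparent():
    # Version 00, 16-byte trace-id, 8-byte parent-id (this span), flags 01 = sampled.
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(incoming):
    """Keep the caller's trace-id and mint a new span-id for this hop.

    Returns (outgoing_traceparent, parent_span_id). Handles only the
    four-field version 00 format."""
    version, trace_id, parent_id, flags = incoming.split("-")
    if len(trace_id) != 32 or len(parent_id) != 16:
        raise ValueError(f"malformed traceparent: {incoming!r}")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}", parent_id


def handle(service, headers, span_log):
    """Hypothetical service: record a span, return headers for the next downstream call."""
    if "traceparent" in headers:
        traceparent, parent_id = child_traceparent(headers["traceparent"])
    else:
        traceparent, parent_id = new_traceparent(), None  # this service starts the trace
    _, trace_id, span_id, _ = traceparent.split("-")
    span_log.append({"service": service, "trace_id": trace_id,
                     "span_id": span_id, "parent_id": parent_id})
    return {"traceparent": traceparent}


def demo():
    spans = []
    headers = handle("gateway", {}, spans)
    headers = handle("orders", headers, spans)
    handle("payments", headers, spans)
    return spans


if __name__ == "__main__":
    for span in demo():
        print(span)
```

Every recorded span shares one trace ID, and each span's `parent_id` points at the span of its caller. This linkage is what lets a tracing backend stitch the hops back into a single request.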

Tailored Strategies for Effective Monitoring

For monolithic systems, observability strategies usually rely on centralized mechanisms that take advantage of the architecture’s unified nature. Instrumentation is typically embedded directly in the application through SDKs or libraries, so all data is collected from a single source. Logs are aggregated in one place, while metrics focus on host- or process-level details, giving a clear view of overall system health. Alerts are generally tied to server errors or patterns in application logs so that anomalies are flagged quickly. Traditional Application Performance Management (APM) tools are often sufficient in these relatively static environments, where data volumes stay manageable and changes are infrequent. This allows teams to keep control with fairly simple setups.
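
The example below is a minimal sketch of centralized logging with error-based alerting in a monolith, built on Python's standard `logging` module. One parent logger collects records from every module, and a custom handler fires an alert callback once a threshold of ERROR records is reached. The logger name `shop`, the threshold, and the `on_alert` callback are assumptions for illustration. A production setup would forward alerts to a paging or incident tool instead.

```python
import logging


class ErrorCountAlert(logging.Handler):
    """Calls `on_alert` once when `threshold` ERROR-or-worse records have been seen.

    Counts are cumulative with no time window, to keep the sketch simple."""

    def __init__(self, threshold, on_alert):
        super().__init__(level=logging.ERROR)  # ignore records below ERROR
        self.threshold = threshold
        self.on_alert = on_alert
        self.count = 0
        self.fired = False

    def emit(self, record):
        self.count += 1
        if self.count >= self.threshold and not self.fired:
            self.fired = True
            self.on_alert(f"{self.count} errors; latest: {record.getMessage()}")


def configure_logging(on_alert, threshold=3):
    """Configure one parent logger ("shop") that every module logger propagates to."""
    logger = logging.getLogger("shop")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # make repeated calls idempotent
    console = logging.StreamHandler()
    console.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s"))
    logger.addHandler(console)
    logger.addHandler(ErrorCountAlert(threshold, on_alert))
    return logger


if __name__ == "__main__":
    alerts = []
    configure_logging(alerts.append)
    checkout = logging.getLogger("shop.checkout")  # a module-level logger
    checkout.info("order placed")
    for _ in range(3):
        checkout.error("payment gateway timeout")
    print(alerts)
```

Module loggers such as `shop.checkout` propagate to the single `shop` logger, so the whole application shares one log stream and one alert rule.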

Conversely, microservices demand a more sophisticated approach because of their distributed and dynamic nature. Standardized instrumentation across services, often provided by frameworks such as OpenTelemetry, keeps data collection consistent even when services run in different languages and environments. Distributed tracing depends on context propagation: each service passes the trace identifiers it received on to the services it calls, typically in request headers, so that a single request can be followed end to end. Centralized log aggregation with service-specific tags helps isolate issues to the service that produced them. Alerting that combines traces and metrics adds context to each alert, reducing the risk of missing critical problems. Modern observability platforms are usually needed to handle the volume of data these frequently changing systems produce. They offer the scalability and flexibility required to keep pace with constant deployments.
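
The following sketch shows service-specific tagging and correlation using Python's standard `logging` and `contextvars` modules. Each service emits JSON log lines tagged with its name and the current trace ID, and an in-memory list stands in for the central log aggregator. The helper names (`service_logger`, `logs_for_trace`, `AggregatorHandler`) and the sample trace ID are hypothetical. In a real deployment, the trace ID would come from the propagated trace context, and instrumentation libraries can often inject it automatically.

```python
import contextvars
import json
import logging

# Trace ID of the request currently being handled (set from incoming trace context).
current_trace_id = contextvars.ContextVar("current_trace_id", default=None)


class ServiceContextFilter(logging.Filter):
    """Tags every record with the emitting service and the current trace ID."""

    def __init__(self, service):
        super().__init__()
        self.service = service

    def filter(self, record):
        record.service = self.service
        record.trace_id = current_trace_id.get()
        return True  # never drop records, only enrich them


class JsonFormatter(logging.Formatter):
    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "service": record.service,
            "trace_id": record.trace_id,
            "message": record.getMessage(),
        })


class AggregatorHandler(logging.Handler):
    """Hypothetical stand-in for shipping logs to a central aggregator."""

    def __init__(self, sink):
        super().__init__()
        self.sink = sink

    def emit(self, record):
        self.sink.append(self.format(record))


def service_logger(service, sink):
    logger = logging.getLogger(f"svc.{service}")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()
    handler = AggregatorHandler(sink)
    handler.addFilter(ServiceContextFilter(service))
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


def logs_for_trace(sink, trace_id):
    """Query the central store for every line belonging to one request."""
    return [entry for entry in map(json.loads, sink) if entry["trace_id"] == trace_id]


if __name__ == "__main__":
    central = []
    orders = service_logger("orders", central)
    payments = service_logger("payments", central)
    token = current_trace_id.set("4bf92f3577b34da6a3ce929d0e0e4736")
    orders.info("order received")
    payments.error("card declined")
    current_trace_id.reset(token)
    orders.info("health check")  # logged outside any trace
    for entry in logs_for_trace(central, "4bf92f3577b34da6a3ce929d0e0e4736"):
        print(entry)
```

Using a `ContextVar` rather than a global keeps the trace ID correct per request, even when a service handles many requests concurrently in threads or async tasks.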

Best Practices for Robust Observability

Best practices matter for observability regardless of the architectural model, although microservices usually call for more advanced solutions because of their inherent complexity. Adopting a vendor-neutral framework such as OpenTelemetry promotes interoperability and consistency across tools and services and simplifies integration. In microservices, prioritizing distributed tracing provides end-to-end visibility. Teams can then follow interactions across asynchronous calls and across services written in different programming languages. Centralizing telemetry in a unified platform further improves troubleshooting. With logs, metrics, and traces in one place, teams get a comprehensive view of system behavior that can reveal underlying issues before they escalate into major disruptions.
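
Once spans from all services land in one store, a trace can be reassembled and analyzed. The sketch below rebuilds the parent-child tree for one trace and computes each span's exclusive (self) time to point at the likeliest bottleneck. The `Span` fields and the sample data are illustrative assumptions. The exclusive-time calculation also assumes child spans run one after another. Concurrent or asynchronous children would need overlap-aware interval math.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    # Simplified, hypothetical span record; real tracing backends store more fields.
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None for the root span
    service: str
    name: str
    duration_ms: float


def children_by_parent(spans):
    children = defaultdict(list)
    for span in spans:
        if span.parent_id is not None:
            children[span.parent_id].append(span)
    return children


def exclusive_times(spans):
    """Duration of each span minus its direct children's durations.

    Assumes children run sequentially; concurrent children would be over-subtracted."""
    children = children_by_parent(spans)
    return {s.span_id: s.duration_ms - sum(c.duration_ms for c in children[s.span_id])
            for s in spans}


def render(spans):
    """Indented text view of the trace tree, one line per span."""
    children = children_by_parent(spans)
    lines = []

    def walk(span, depth):
        lines.append(f"{'  ' * depth}{span.service}:{span.name} {span.duration_ms:.0f} ms")
        for child in children[span.span_id]:
            walk(child, depth + 1)

    for root in (s for s in spans if s.parent_id is None):
        walk(root, 0)
    return lines


def sample_trace():
    # Illustrative spans for one request, as four services might report them.
    return [
        Span("t1", "a", None, "gateway", "GET /checkout", 120.0),
        Span("t1", "b", "a", "orders", "create_order", 100.0),
        Span("t1", "c", "b", "payments", "charge", 70.0),
        Span("t1", "d", "b", "inventory", "reserve", 20.0),
    ]


if __name__ == "__main__":
    spans = sample_trace()
    print("\n".join(render(spans)))
    self_times = exclusive_times(spans)
    worst = max(spans, key=lambda s: self_times[s.span_id])
    print(f"largest self-time: {worst.service}:{worst.name}")
```

In the sample trace, `payments:charge` has the largest self-time. It is therefore the first place to look, even though the gateway span has the longest total duration.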

Robust observability also depends on automation and proactive monitoring to manage the growing volume and complexity of telemetry, particularly in microservices environments. AI-driven anomaly detection and root cause analysis reduce manual effort and help teams spot irregularities faster. Shifting observability left, that is, building it into the early stages of the development lifecycle, helps catch problems before they reach production. Contextual alerting goes beyond static thresholds to deliver meaningful notifications, which reduces alert fatigue and speeds up response. Monitoring dynamic infrastructure through service discovery and tagging accounts for the short-lived nature of modern components. This lets teams adapt to constant change without losing sight of critical performance indicators.
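
Production AI-driven detectors are far more sophisticated, but the basic move away from static thresholds can be sketched with a rolling statistical baseline. In this approach, a value is flagged when it lies more than `z_threshold` standard deviations from the mean of recent observations. This is a simple z-score technique, not machine learning. The class name and default parameters are assumptions chosen for illustration.

```python
import statistics
from collections import deque


class RollingBaselineDetector:
    """Flags values that deviate strongly from a rolling baseline (z-score).

    A simple statistical stand-in for AI-driven detection; parameters are illustrative."""

    def __init__(self, window=60, z_threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)  # most recent observations only
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value):
        """Return True if `value` is anomalous relative to the recent history."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            std = statistics.pstdev(self.history)
            if std > 0:
                anomalous = abs(value - mean) / std > self.z_threshold
            else:
                anomalous = value != mean  # flat baseline: any change stands out
        # Anomalies join the history too, so a sustained shift becomes the new baseline.
        self.history.append(value)
        return anomalous


if __name__ == "__main__":
    detector = RollingBaselineDetector()
    latencies_ms = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 100, 250, 99]
    for i, latency in enumerate(latencies_ms):
        if detector.observe(latency):
            print(f"sample {i}: {latency} ms looks anomalous")
```

The baseline moves with the data, so one detector class can adapt to services whose normal latency differs, something a single fixed threshold cannot do. Appending anomalous values to the history means a sustained shift eventually becomes the new normal. That is one design trade-off among several.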

Navigating Future Observability Needs

Taken together, these strategies show that tailored approaches are indispensable for addressing the distinct challenges of monolithic and microservices architectures. Monoliths benefit from centralized monitoring that capitalizes on their unified structure. Microservices, in contrast, require distributed tracing and scalable data management to handle their complexity. Tools and frameworks have evolved to meet both sets of demands, offering simplicity where it suffices and sophistication where it is needed.

Looking ahead, teams should keep investing in modern observability platforms that support dynamic environments and high data volumes. Automation and AI-driven insights can further strengthen proactive monitoring, helping teams anticipate issues before they affect users. A shift-left mindset keeps observability a core consideration from the earliest stages of development. By staying adaptable and aligning their strategies with their architecture, organizations can build resilient systems that remain performant and reliable as their technology continues to change.