The rapid evolution of artificial intelligence models has unlocked unprecedented reasoning capabilities, yet their practical application remains constrained by a fundamental barrier: the messy, complex, and siloed nature of real-world operational data. For an AI to evolve from a sophisticated chatbot into a truly effective “agent” capable of solving problems, it must be able to interact securely and reliably with the databases, applications, and infrastructure that power a business. Responding to this challenge, Google Cloud has unveiled a significant expansion of its managed services for the open-source Model Context Protocol (MCP), a strategic move that aims to create a standardized, universal interface between AI and data. The initiative introduces managed MCP servers across its flagship database services, building a secure bridge that allows models like Gemini not just to access data but to actively participate in, and automate, complex business and development workflows.

A Unified Bridge for AI and Data

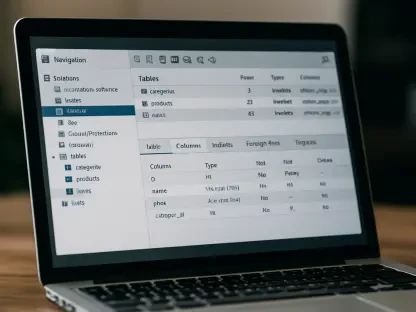

The central ambition behind this expansion is the radical simplification of AI-to-data connectivity, a process that has historically required bespoke integrations, complex middleware, and significant developer overhead. By introducing managed MCP servers, Google Cloud is offering a completely serverless approach that abstracts away the underlying infrastructure. This means developers no longer need to deploy, configure, or maintain intermediary services to connect their agents to data sources. Instead, they can establish a secure connection by simply configuring an MCP server endpoint within their agent’s settings, providing immediate and direct access to operational data. This streamlined process is designed to accelerate the development of agentic applications, allowing teams to focus on building intelligent workflows rather than managing the plumbing of data access. The serverless model ensures that these connections can scale seamlessly with demand, handling agentic workloads without incurring additional operational burdens.
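At the protocol level, MCP clients and servers exchange JSON-RPC 2.0 messages. Once an agent is pointed at an endpoint, it typically discovers the available tools with a `tools/list` request and invokes one with `tools/call`. The minimal Python sketch below only builds those request payloads. Transport, the session `initialize` handshake, and IAM-based authentication are deliberately omitted, and the tool name `list_instances` with its `project` argument is a hypothetical placeholder, not a documented tool of any Google Cloud MCP server.

```python
import itertools
import json
from typing import Any, Optional

# Monotonic request ids; JSON-RPC 2.0 uses them to match responses to requests.
_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request object of the kind MCP uses."""
    request: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def list_tools() -> dict[str, Any]:
    """Ask the MCP server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Invoke one tool by name with JSON-serializable arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    # Hypothetical tool name and arguments, for illustration only.
    print(json.dumps(list_tools()))
    print(json.dumps(call_tool("list_instances", {"project": "my-project"})))
```

Because the message shape is defined by the open protocol rather than by any particular model, the same requests apply whichever model is driving the agent.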

This initiative also places heavy emphasis on creating a secure and observable environment for AI interactions, which is crucial for enterprise adoption. The managed MCP servers are built with enterprise-grade security as a foundational principle, so that AI agents operate within a trusted, governable framework. Every action and query an agent executes through an MCP server is logged in Cloud Audit Logs, giving security and compliance teams a complete and immutable audit trail. That record shows exactly how agents are interacting with sensitive data, allowing organizations to monitor for anomalous behavior and maintain accountability. The system also provides performance monitoring, giving administrators the tools to diagnose issues, optimize interactions, and keep agentic applications running efficiently and reliably, which strengthens the platform’s case for mission-critical operations.

Empowering Agents Across Diverse Databases

The power of this new interface is demonstrated through its deep integration across a diverse portfolio of Google Cloud’s specialized databases, enabling AI agents to perform a wide range of sophisticated, context-aware tasks. For instance, with the AlloyDB for PostgreSQL MCP server, an agent can go far beyond simple data retrieval. It can be tasked with advanced database administration functions, such as creating new database schemas based on high-level requirements, diagnosing the root causes of slow-running queries, and even executing complex vector similarity searches directly within the database to power intelligent search and recommendation features. In the case of Spanner, agents can leverage its unique multi-model capabilities, including its integrated graph database. This allows an agent to model and query deeply interconnected data using standard SQL and Graph Query Language (GQL), making it possible to rapidly uncover complex relationships, such as identifying sophisticated fraud rings or generating highly personalized product recommendations based on a user’s entire interaction history.
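Vector similarity search ranks stored embeddings by how close they are to a query embedding. In AlloyDB that ranking runs inside the database (AlloyDB supports the pgvector extension, for example); the pure-Python sketch below only illustrates the underlying computation, cosine similarity followed by top-k selection. The product names and three-dimensional vectors are made-up assumptions, not data from any real system.

```python
import heapq
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], rows: dict[str, Sequence[float]], k: int) -> list[tuple[str, float]]:
    """Return the k row ids most similar to the query, best first (linear scan)."""
    scored = ((row_id, cosine_similarity(query, vec)) for row_id, vec in rows.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Toy product embeddings; real ones would come from an embedding model.
    products = {
        "hiking boots": [0.9, 0.1, 0.0],
        "trail socks": [0.8, 0.3, 0.1],
        "espresso maker": [0.0, 0.2, 0.9],
    }
    print(top_k([1.0, 0.2, 0.0], products, k=2))
```

A database-side implementation adds indexing, such as approximate nearest-neighbour structures, so it does not have to score every row the way this linear scan does.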

The same consistent interaction model extends across the rest of the database portfolio. The Cloud SQL MCP server offers a unified interface for its PostgreSQL, MySQL, and SQL Server engines, allowing developers and database administrators to manage instances across all three engines using natural language. An agent could be asked to optimize query performance, assist in application development by generating SQL, or troubleshoot database issues by analyzing logs and metrics. For high-performance NoSQL workloads, Bigtable’s MCP integration simplifies the automation of operational workflows common in customer support, logistics, and IT operations, where agents interact with high-throughput time-series data. Similarly, the Firestore MCP server, aimed at mobile and web applications, allows an agent to sync with live document collections. This enables dynamic, real-time interactions, such as checking a user’s current session state or verifying an order status directly from the database in response to a simple natural-language prompt.

Beyond Data: AI for Development and Infrastructure

Google’s vision for an agent-centric cloud extends beyond mere data interaction and into the very process of software development and infrastructure management. A key innovation in this announcement is the new Developer Knowledge MCP server, a unique service that provides an API connecting Integrated Development Environments (IDEs) directly to Google’s comprehensive and up-to-date official documentation. This effectively transforms an AI agent into an expert co-pilot for developers. The agent can provide contextually relevant answers to technical questions, assist in troubleshooting code by cross-referencing it with best practices and known issues, and guide developers through complex architectural decisions in real time. This integration promises to significantly reduce the friction in the development lifecycle, allowing developers to stay focused within their IDE while receiving expert guidance on demand, ultimately boosting productivity and improving code quality.

The practical power of combining these new capabilities was highlighted in a demonstration where an AI agent fully automated the complex task of migrating an application’s backend from a local database to a managed Cloud SQL instance. In this scenario, the agent seamlessly orchestrated the entire process by interacting with multiple MCP servers. It utilized the Cloud SQL MCP server to provision the new database instance, configure its settings, and manage the data migration process. Simultaneously, the agent connected to the Developer Knowledge MCP server to retrieve real-time architectural guidance. This allowed it to make informed decisions based on Google’s official documentation, ensuring the migration followed best practices for performance, security, and reliability. This example showcases a future where AI agents can handle not just discrete tasks but also complex, multi-step projects that require both infrastructure interaction and deep technical knowledge, fundamentally changing how cloud environments are managed.
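An agent working with several MCP servers at once, as in the migration demo, has to send each tool call to the server that advertised that tool. The sketch below models only that routing step, under stated assumptions: `McpServer` is a hypothetical in-memory stand-in that records calls instead of sending JSON-RPC, and the server and tool names (`search_docs`, `create_instance`, `import_data`) are invented for illustration, not the real tool sets of the Cloud SQL or Developer Knowledge servers.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class McpServer:
    """Hypothetical stand-in for a connected MCP server and the tools it advertised."""
    name: str
    tools: set[str]
    calls: list[tuple[str, dict[str, Any]]] = field(default_factory=list)

    def call(self, tool: str, arguments: dict[str, Any]) -> str:
        # A real client would send a JSON-RPC tools/call here; this just records it.
        self.calls.append((tool, arguments))
        return f"{self.name}:{tool}"


class Router:
    """Route each tool call to the single server that advertised that tool."""

    def __init__(self, servers: list[McpServer]) -> None:
        self._by_tool: dict[str, McpServer] = {}
        for server in servers:
            for tool in server.tools:
                if tool in self._by_tool:
                    raise ValueError(f"tool {tool!r} is offered by more than one server")
                self._by_tool[tool] = server

    def call(self, tool: str, arguments: dict[str, Any]) -> str:
        try:
            server = self._by_tool[tool]
        except KeyError:
            raise LookupError(f"no connected server offers {tool!r}") from None
        return server.call(tool, arguments)


if __name__ == "__main__":
    # Hypothetical server and tool names, for illustration only.
    docs = McpServer("developer-knowledge", {"search_docs"})
    sql = McpServer("cloud-sql", {"create_instance", "import_data"})
    router = Router([docs, sql])
    plan = [
        ("search_docs", {"query": "Cloud SQL sizing best practices"}),
        ("create_instance", {"engine": "POSTGRES"}),
        ("import_data", {"source": "local-dump.sql"}),
    ]
    for tool, args in plan:
        print(router.call(tool, args))
```

Rejecting duplicate tool names keeps the sketch simple; a real client might instead namespace tools by server, since two servers can legitimately expose tools with the same name.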

Security and Open Standards as Cornerstones

Foundational to the entire offering is an unwavering commitment to robust security and the fostering of a healthy, open ecosystem. The managed MCP servers are engineered upon Google Cloud’s standard security frameworks, prioritizing an identity-first approach to access control. Authentication is handled exclusively through Identity and Access Management (IAM), completely eliminating the need for shared secret keys or passwords. This ensures that an agent’s permissions are granularly controlled and tied to a specific identity, allowing administrators to grant it access only to the specific tables, views, or functions it is explicitly authorized to use. This principle of least privilege is critical for preventing unauthorized data access and ensuring that AI agents operate within strictly defined boundaries, providing enterprises with the confidence to deploy them in production environments that handle sensitive information.
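The least-privilege principle described above amounts to deny-by-default: an agent may perform an action on a resource only if that exact permission was granted to its identity. The sketch below models that rule with an in-memory set of grants. It is not the IAM API and ignores roles, resource hierarchy, and conditions; the principal and table names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """One explicit permission: a principal may perform an action on a resource."""
    principal: str
    action: str
    resource: str


class PolicyStore:
    """Deny-by-default permission check illustrating least privilege (not the IAM API)."""

    def __init__(self, grants: list[Grant]) -> None:
        self._grants = set(grants)

    def is_allowed(self, principal: str, action: str, resource: str) -> bool:
        # Anything not explicitly granted is denied.
        return Grant(principal, action, resource) in self._grants


if __name__ == "__main__":
    # Hypothetical agent identity and table names.
    agent = "support-agent"
    policy = PolicyStore([Grant(agent, "select", "orders_view")])
    print(policy.is_allowed(agent, "select", "orders_view"))  # True
    print(policy.is_allowed(agent, "select", "customers"))    # False
    print(policy.is_allowed(agent, "delete", "orders_view"))  # False
```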

Moreover, Google has deliberately built this ecosystem on the open-source Model Context Protocol standard, a decision that underscores a commitment to interoperability and helps customers avoid vendor lock-in. Because the managed servers comply with the open MCP standard, they are not restricted to Google’s own AI models like Gemini. The platform is explicitly designed to be model-agnostic, so third-party AI agents, such as Anthropic’s Claude or other leading models, can connect to the Google Cloud ecosystem by configuring a Custom Connector that points to the appropriate Google Cloud database MCP endpoint. This open approach gives customers greater flexibility and choice and encourages broader industry adoption of the standard, paving the way for a more interconnected and competitive landscape for AI development.

The Roadmap to an Agent-Centric Cloud

The expansion of managed MCP services across Google Cloud’s data and developer platforms is a clear declaration of long-term strategy. The announcements indicate that the company is working aggressively to elevate AI agents from niche tools to first-class citizens of cloud development and operations. The standardized approach to AI interaction presented here aims to create a foundational layer for a new generation of intelligent applications capable of reasoning, planning, and acting on real-world systems. Google frames this not as a single product launch but as a sustained, strategic investment in weaving AI securely into every layer of the cloud, with agent-driven automation becoming the standard way to manage complexity and unlock new efficiencies.

To underscore this long-term commitment, the company also previewed its roadmap, which extends managed MCP support to an even broader array of critical cloud services. Planned integrations include business intelligence platforms like Looker, migration tools such as the Database Migration Service (DMS) and BigQuery Migration Service, and core infrastructure components like Memorystore for in-memory caching and Pub/Sub for asynchronous messaging. The plan signals an intent to make nearly every component of the Google Cloud ecosystem accessible and controllable through this agent-centric interface, pointing toward highly autonomous systems that manage data analytics, execute complex migrations, and orchestrate real-time data flows through a unified, intelligent control plane.