The foundational technology behind big data operations at thousands of enterprises worldwide has been found to contain a significant security flaw, one that exposes critical systems to sudden crashes and data corruption. The newly disclosed vulnerability affects Apache Hadoop, the widely used open-source framework for distributed storage and processing, and threatens the stability of the production environments built on it. Tracked as CVE-2025-27821 and reported by security researcher BUI Ngoc Tan, the issue is an out-of-bounds write in the HDFS native client. Although Apache rates its severity as moderate, its potential impact on mission-critical data pipelines and cluster management tooling is substantial. Because untrusted input can trigger the faulty write and corrupt process memory, a successful exploit could set off a cascade of failures that halts essential data operations. Organizations should therefore assess their exposure and remediate promptly.

1. A Deeper Look at the HDFS Native Client Flaw

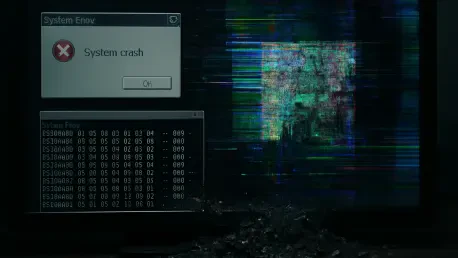

The flaw resides in the URI parser of Apache Hadoop’s HDFS native client, a core component for interacting with the Hadoop Distributed File System. By supplying specially crafted input, an attacker can cause the parser to write data beyond the bounds of the memory buffer allocated for it. Those stray writes overwrite adjacent memory, and the resulting corruption can destabilize the affected process and the systems that depend on it. The consequences of successful exploitation range from denial-of-service conditions that can leave Hadoop clusters unavailable, to unpredictable system behavior and, most seriously, corruption or outright loss of stored data. Because the HDFS native client is widely deployed and deeply integrated into data processing pipelines and cluster management tools, the attack surface is especially broad. For organizations storing sensitive or regulated data on HDFS, the risk is higher still: an exploited system could compromise the integrity and availability of that data in production.
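The disclosure does not publish the vulnerable parser code, so the snippet below is not Hadoop’s implementation. It is a minimal Python simulation of the general bug class, under stated assumptions: a flat `bytearray` stands in for C memory, with a 16-byte host buffer sitting directly before an 8-byte port field, and every name (`make_memory`, `unchecked_copy`, `checked_copy`, `port_field`) is hypothetical. An unchecked copy lets an overlong host name spill into the neighbouring field, while a bounds-checked copy rejects it.

```python
# Simulation only: models the out-of-bounds write bug class, not Hadoop's code.
# A flat bytearray stands in for C memory: a 16-byte host buffer followed
# immediately by an 8-byte port field (hypothetical layout).
HOST_SIZE = 16
PORT_SIZE = 8


def make_memory() -> bytearray:
    """Allocate the simulated struct with the port field set to "8020"."""
    mem = bytearray(HOST_SIZE + PORT_SIZE)
    mem[HOST_SIZE:] = b"8020".ljust(PORT_SIZE, b"\x00")
    return mem


def unchecked_copy(mem: bytearray, data: bytes) -> None:
    """Mimic a C loop that trusts the input length (the vulnerable pattern)."""
    for i, byte in enumerate(data):
        mem[i] = byte  # bytes past HOST_SIZE land in the adjacent port field


def checked_copy(mem: bytearray, data: bytes) -> None:
    """Copy only if the data fits, leaving room for a NUL terminator."""
    if len(data) >= HOST_SIZE:
        raise ValueError(f"host component too long: {len(data)} bytes")
    mem[: len(data)] = data
    mem[len(data)] = 0


def port_field(mem: bytearray) -> bytes:
    """Read the port field, ignoring trailing NUL padding."""
    return bytes(mem[HOST_SIZE:]).rstrip(b"\x00")


if __name__ == "__main__":
    crafted = b"namenode.example.com"  # 20 bytes aimed at a 16-byte buffer

    mem = make_memory()
    unchecked_copy(mem, crafted)
    print(port_field(mem))  # b'.com': the port field has been overwritten

    mem = make_memory()
    try:
        checked_copy(mem, crafted)
    except ValueError as err:
        print(err)  # host component too long: 20 bytes
    print(port_field(mem))  # b'8020': adjacent memory is untouched
```

Python still raises `IndexError` if a write runs past the end of the whole `bytearray`; C offers no such guard, which is why a real out-of-bounds write can corrupt memory silently or crash the process outright.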

2. Essential Steps for Securing Hadoop Deployments

Organizations should act quickly to safeguard their big data infrastructure. Apache’s primary recommendation is to upgrade all affected systems to version 3.4.2 or later, which contains the fix. To do that reliably, system administrators should first audit every Hadoop deployment on their networks and identify each instance running a vulnerable version.

Patching should be paired with proactive measures. Monitoring HDFS logs for suspicious or malformed URI patterns can surface attempts to exploit the vulnerability, and network-level access controls that restrict HDFS client connections to trusted, verified sources reduce exposure to unauthorized access. The incident is also a good occasion to review and strengthen patch management procedures, so that future high-impact vulnerabilities are addressed faster.
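The deployment audit can start as a simple version comparison. The following is a minimal Python sketch, assuming you have already collected one version string per host (for example from the first line of `hadoop version` output, which reads like `Hadoop 3.3.6`); the inventory format and host names are hypothetical. It flags every host below 3.4.2, the release named as containing the fix. That rule may also flag older release lines the official advisory does not list as affected, so confirm the affected range there, and note that vendor distributions which backport patches can report an older version number while already being fixed.

```python
import re

# First release named as containing the fix for CVE-2025-27821.
PATCHED = (3, 4, 2)


def parse_version(text: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from strings like "Hadoop 3.3.6" or "3.4.1"."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return (major, minor, patch)


def needs_upgrade(version_text: str) -> bool:
    """True if the reported version sorts below the patched release (numerically)."""
    return parse_version(version_text) < PATCHED


def audit(inventory: dict[str, str]) -> list[str]:
    """Return the sorted host names whose reported version is older than 3.4.2."""
    return sorted(host for host, version in inventory.items() if needs_upgrade(version))


if __name__ == "__main__":
    # Hypothetical inventory: host name -> version string as collected.
    inventory = {
        "edge-01": "Hadoop 3.3.6",
        "etl-02": "Hadoop 3.4.2",
        "gw-03": "3.4.1",
        "report-04": "3.4.2-vendor-build",
    }
    print(audit(inventory))  # ['edge-01', 'gw-03']
```

Comparing integer tuples rather than raw strings avoids the classic trap where `"3.10.0" < "3.4.2"` holds lexicographically.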
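The disclosure does not describe what a malicious URI looks like, so any log rule is an assumption. As a starting point, this minimal Python sketch scans log lines for `hdfs://` URIs and flags ones that are overlong, contain non-printable characters or an encoded NUL (`%00`), or have an authority section that Python’s `urllib.parse.urlsplit` cannot handle (for example a non-numeric or out-of-range port). The 2048-character threshold and the function names are hypothetical; tune the rules to what your own workloads normally log.

```python
import re
from urllib.parse import urlsplit

MAX_URI_LEN = 2048  # hypothetical threshold; tune to your workloads
URI_RE = re.compile(r"hdfs://\S+")


def uri_anomalies(uri: str) -> list[str]:
    """Return heuristic reasons an hdfs:// URI looks malformed (empty if none)."""
    reasons = []
    if len(uri) > MAX_URI_LEN:
        reasons.append("overlong")
    if not uri.isprintable():
        reasons.append("non-printable characters")
    if "%00" in uri:
        reasons.append("encoded NUL")
    try:
        # .port raises ValueError for non-numeric or out-of-range ports;
        # urlsplit itself raises ValueError for some malformed hosts.
        _ = urlsplit(uri).port
    except ValueError:
        reasons.append("unparseable authority")
    return reasons


def scan(lines):
    """Yield (line_number, truncated_uri, reasons) for each suspicious URI."""
    for lineno, line in enumerate(lines, start=1):
        for uri in URI_RE.findall(line):
            reasons = uri_anomalies(uri)
            if reasons:
                yield lineno, uri[:80], reasons


if __name__ == "__main__":
    sample = [
        "INFO open hdfs://nn1:8020/data/part-00000",
        "WARN open hdfs://nn1:99999/data",
        "WARN open hdfs://nn1:8020/" + "A" * 3000,
        "INFO open hdfs:///user/etl/out",
    ]
    for finding in scan(sample):
        print(finding)
```

URIs with an empty authority such as `hdfs:///user/etl/out` are deliberately not flagged, since they legitimately resolve against the default file system.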
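Access restrictions themselves belong in firewalls, security groups, or host-level rules, but it helps to check observed traffic against the intended policy. This minimal Python sketch assumes connection records arrive as hypothetical `(source_ip, dest_port)` pairs from a flow log. The trusted networks are placeholders, and ports 8020 (NameNode RPC) and 9866 (DataNode data transfer) are common Hadoop 3 defaults that you should confirm against your own configuration.

```python
import ipaddress

# Placeholder policy: networks allowed to open HDFS client connections.
TRUSTED_NETWORKS = [
    ipaddress.ip_network("10.20.0.0/16"),
    ipaddress.ip_network("192.168.50.0/24"),
]
# Common Hadoop 3 defaults (NameNode RPC, DataNode data transfer); verify locally.
HDFS_PORTS = {8020, 9866}


def is_trusted(source_ip: str) -> bool:
    """True if the address (IPv4 or IPv6) falls inside any trusted network."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net for net in TRUSTED_NETWORKS)


def untrusted_hdfs_connections(records):
    """Filter (source_ip, dest_port) records to HDFS traffic from untrusted sources."""
    return [
        (ip, port)
        for ip, port in records
        if port in HDFS_PORTS and not is_trusted(ip)
    ]


if __name__ == "__main__":
    flows = [
        ("10.20.3.4", 8020),
        ("203.0.113.9", 8020),
        ("192.168.50.7", 9866),
        ("203.0.113.9", 22),
        ("198.51.100.1", 9866),
    ]
    print(untrusted_hdfs_connections(flows))
    # [('203.0.113.9', 8020), ('198.51.100.1', 9866)]
```

An address from one IP family is simply never contained in a network of the other family, so IPv6 sources are reported as untrusted unless an IPv6 network is added to the allowlist.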