A significant portion of the internet went dark for users across the country this morning, as a widespread outage at Amazon Web Services (AWS) triggered a cascade of failures affecting everything from popular streaming services to critical enterprise applications. The disruption, which began in the early hours of February 18, was a stark reminder of the digital economy’s reliance on a handful of major cloud infrastructure providers. As engineering teams scrambled to diagnose and resolve the underlying issues, businesses and consumers alike were left grappling with service interruptions that disrupted productivity, commerce, and daily communications for millions. The outage’s scope expanded quickly, showing how a seemingly isolated fault inside a core provider can propagate through dependent systems and ripple across numerous industries nationwide.

1. Unpacking the Timeline of Disruption

The first signs of trouble emerged shortly after 6:00 a.m. ET, when monitoring systems and user reports flagged a sharp increase in timeouts and error rates for applications using core cloud services. Initially, the issues appeared localized to object storage and virtual server instances, causing intermittent failures for websites and mobile apps trying to load assets or execute backend logic. The problem’s true depth became apparent as the hours progressed. By 8:30 a.m. ET, the incident had escalated dramatically, with networking and database access problems appearing across multiple availability zones. This escalation produced a domino effect, taking down a host of dependent services, including content delivery networks, user authentication systems, and automated software deployment pipelines. The outage followed the pattern of a classic cascading failure: impaired foundational services brought down the higher-level applications that depend on them for basic functionality, paralyzing a wide swath of digital operations.

The impact was felt almost immediately by both consumer-facing platforms and internal enterprise systems. High-traffic streaming services, which rely on cloud infrastructure for content delivery and user authentication, experienced significant buffering issues and outright login failures, frustrating viewers during peak morning hours. At the same time, enterprise workloads suffered from delayed background processing jobs and a surge in failed database transactions, disrupting critical business operations. The outage was indiscriminate, affecting both legacy applications migrated to the cloud and modern, cloud-native deployments, and underscoring how universally both depend on the same core infrastructure primitives. For development teams, the timing was particularly disruptive: continuous integration and deployment (CI/CD) pipelines ground to a halt, preventing them from shipping updates or rolling back problematic changes and effectively freezing software development cycles until services were restored.

2. The Economic and Operational Fallout

The economic repercussions of the outage were both immediate and substantial, illustrating how a single provider’s interruption can disrupt digital supply chains and halt commerce. E-commerce platforms reported a significant drop in successful checkouts as payment gateways and inventory management systems failed, leading to lost sales during a key business period. In the highly competitive digital advertising market, automated bidding systems recorded missed opportunities, resulting in lost revenue for both advertisers and publishers. Furthermore, analytics pipelines, which are vital for business intelligence and decision-making, developed significant gaps in data ingestion, compromising the integrity of key performance metrics. These direct financial losses were compounded by the operational costs of mobilizing engineering teams for emergency response and the intangible damage to brand reputation as customer-facing services became unreliable. The event served as a clear financial stress test for businesses heavily invested in a single cloud provider’s ecosystem.

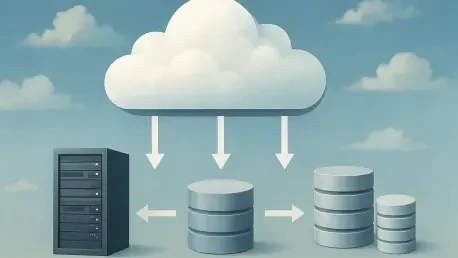

In the aftermath, the incident prompted a widespread re-evaluation of infrastructure resilience and dependency management across the technology industry. Engineering leaders convened to dissect the failure and update their contingency plans, with a renewed focus on building more robust systems. The outage became a powerful case study for advocating investment in multi-region redundancy, a strategy that involves distributing workloads across geographically separate data centers to insulate services from localized failures. More advanced discussions centered on the feasibility and cost of multi-cloud failover architectures, which would allow critical applications to shift to an entirely different cloud provider during a major disruption. The event also highlighted the critical importance of comprehensive observability and well-rehearsed incident playbooks, ensuring that teams can detect, diagnose, and mitigate issues more rapidly in the future. The disruption was not just a technical failure but a catalyst for strategic conversations about the architectural choices that underpin modern digital services, pushing organizations to prioritize resilience over pure cost optimization.
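To make the multi-region idea concrete, the following is a minimal Python sketch of ordered failover: a request is tried against a preferred region and, if that fails, against the next one. It is an illustration under stated assumptions and not a description of any provider's API. The region names, the `call_with_failover` helper, and the simulated `fake_request` function are all hypothetical.

```python
from typing import Callable, Dict, Sequence, Tuple


class AllRegionsFailed(Exception):
    """Raised when every configured region fails to serve the request."""


def call_with_failover(
    regions: Sequence[str],
    request: Callable[[str], str],
) -> Tuple[str, str]:
    """Try each region in order and return (region, response) from the first success."""
    errors: Dict[str, Exception] = {}
    for region in regions:
        try:
            return region, request(region)
        except Exception as exc:  # broad on purpose: any failure triggers failover
            errors[region] = exc
    raise AllRegionsFailed(errors)


def fake_request(region: str) -> str:
    """Hypothetical stand-in for a real network call; 'region-a' simulates an outage."""
    if region == "region-a":
        raise ConnectionError("simulated outage")
    return f"ok from {region}"


served_by, body = call_with_failover(["region-a", "region-b"], fake_request)
print(served_by, body)
```

A production setup would need considerably more than this, such as health checks, replicated data, and routing at the DNS or load-balancer level, which is part of why the cost of such architectures featured in the discussions described above.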