Capex isn’t the bottleneck – throughput, data access, and architecture are. Here’s how to redesign your cloud roadmap around the realities of AI operations.

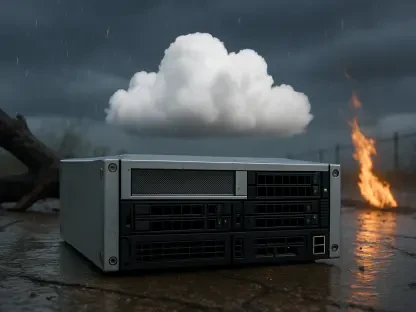

For two years, board decks and bank research notes have treated AI like a steel mill: pour in capital, wait for capacity, harvest productivity. The storyline is tidy – hyperscalers spend billions on graphics processing units and data centers, enterprises follow with “AI budgets,” and benefits arrive on schedule. That factory metaphor collapses the moment your models meet your workflows. AI value isn’t a linear function of capital; it’s a system outcome shaped by data access, change management, and cloud architecture.

This piece shows where the capex-first narrative breaks down and offers an alternative. It names the true bottlenecks, explains how those constraints pile up as “AI debt,” and closes with a practical roadmap for planning around them.

Why the Capex-First Narrative Sticks

Big numbers feel like progress. Amortization schedules fit neatly in spreadsheets. Dashboards render tidy capacity lines. Inside cloud teams, the truth is messier. Model performance is path-dependent on domain context, and infrastructure spend is path-dependent on workload shape. Pay for the wrong shape – too much fixed graphics processing unit capacity, too little elastic memory bandwidth, no plan for data gravity – and you’ll optimize the wrong constraint. You can burn millions and still be blocked by a schema change or an access review.

The narrative also collapses AI into a single S-curve. Enterprises don’t buy “AI” generically; they stand up dozens of narrow systems with different cost profiles. A retrieval-augmented agent for underwriting lives on latency and knowledge freshness. A nightly forecasting pipeline is concerned with retraining windows and batch costs. Lumping them together hides a practical truth: cloud architecture – not raw capital expenditure – determines the curve you actually achieve.

What This Means for Your Cloud Roadmap

1) Treat capacity as portfolios, not pools. If your plan says “X graphics processing units for AI,” you’re already behind. Map workloads to archetypes – interactive inference, scheduled training, vector search, fine-tune bursts, feature engineering, and evaluation – and give each a first-class lane. Architect per-archetype service level objectives, scaling policies, and placement strategies across regions and vendors. Your goal isn’t maximum utilization; it’s minimum lead time from idea to safe, cost-efficient production.

2) Move data work left. Most AI delays live in the seams between app teams and data owners. Adopt product thinking for datasets, embeddings, and features. Make schemas, freshness, and lineage auditable and changeable without heroics. If governance requires a ticket for every definition tweak, your AI roadmap is a queue-management problem disguised as a graphics processing unit problem. Invest in semantic layers and contracts that travel with the data, so model work isn’t re-litigating definitions.

3) Instrument unit economics, not just clusters. Cloud bills reveal where money goes; they rarely indicate where value originates. Measure per-use-case units: cost per qualified lead scored, cost per document summarized within a service-level agreement, cost per fraud alert at a target precision. Tie budgets to these units and route them through platform teams, so spending reflects product reality, not only cluster graphs.

4) Embrace multi-shape, multi-venue by default. AI rewards heterogeneity. The optimal answer might be some work on graphics processing units, some on inference application-specific integrated circuits, some in your virtual private cloud, some at a provider, and some at the edge. Lock-in isn’t just commercial; it’s architectural. Stabilize the layers that must persist – data contracts, service interfaces, and security posture – and keep swappability higher up, in layers such as model endpoints, vector indexes, and schedulers.

5) Fund outcomes, not capacity. Commit dollars to golden units (one agreed cost metric and one quality metric per use case) and to customer-facing milestones, then let teams select models and infrastructure that best hit those outcomes. When budget line items shift from “instances” to “results,” waste becomes obvious – and so do smarter placement decisions, such as moving latency-tolerant jobs to more cost-effective venues or adopting preemptible capacity for bursty training.
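
The per-use-case unit economics in item 3 can be sketched in a few lines of Python. This is a minimal illustration, not a billing integration: the `UseCaseMonth` record, its field names, and all of the numbers are assumptions made up for the example. The idea it shows is that spend is divided by the units that actually met the agreed target, so failed or late units raise the unit cost instead of hiding in a cluster graph.

```python
from dataclasses import dataclass


@dataclass
class UseCaseMonth:
    """One month of spend and output for one AI use case (hypothetical schema)."""
    name: str
    cloud_spend_usd: float     # spend attributed to this use case
    units_attempted: int       # e.g. documents submitted for summarization
    units_meeting_target: int  # units that met the agreed quality or latency target


def cost_per_good_unit(m: UseCaseMonth) -> float:
    """Spend divided by units that met the target; misses still cost money."""
    if m.units_meeting_target == 0:
        return float("inf")
    return m.cloud_spend_usd / m.units_meeting_target


def target_hit_rate(m: UseCaseMonth) -> float:
    """Share of attempted units that met the target."""
    if m.units_attempted == 0:
        return 0.0
    return m.units_meeting_target / m.units_attempted


# Illustrative numbers only, not measurements.
summaries = UseCaseMonth("doc-summaries", 12_000.0, 400_000, 380_000)
fraud = UseCaseMonth("fraud-alerts", 30_000.0, 60_000, 45_000)

for m in (summaries, fraud):
    print(f"{m.name}: ${cost_per_good_unit(m):.4f} per good unit, "
          f"{target_hit_rate(m):.0%} hit rate")
```

Reporting the hit rate next to the unit cost keeps the two from being traded off silently: a cheaper model that misses the target more often shows up in both numbers.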

A Pragmatic Path Forward (Six Moves)

One: Run a bottleneck audit. Track queue time from idea to deployment across data prep, security review, model selection, evaluation, and release. Publish the current lead time and aim for a 50% reduction before purchasing another rack.

Two: Carve out archetype lanes. For interactive inference, prioritize low-latency networking, autoscaling, and aggressive caching. For training bursts, design preemptible strategies and warm starts. For vector search, choose storage that respects data gravity and query locality.

Three: Productize knowledge. Build retrieval-augmented generation like a product: source control for prompts and retrieval graphs, dataset versioning, freshness service-level objectives, and red-teaming as a release gate. The model isn’t the product; the retrieval path is.

Four: Federate governance without centralizing change. Create a cross-functional council that ships policies as code, encompassing masking, retention, lineage, and evaluation standards. Give domains the right to move, within guardrails, without waiting on a central queue.

Five: Declare the golden units. For every AI feature in production, agree on one business-aligned cost and one quality metric. Make them visible. Let teams trade architecture and model choices against those units, not against vague notions of “efficiency.”

Six: Build a buy/borrow/build playbook. For each use case, set defaults: buy for commodity tasks, borrow via API where speed beats customization, build only where proprietary context compounds. Align compute commitments to that mix – not to a headline graphics processing unit number.
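
The bottleneck audit in move One can be sketched as a small script. It assumes each project records the date it entered each stage plus its deployment date; that record format, the stage keys, and the sample dates are hypothetical. The sketch reports median days per stage, the median end-to-end lead time, the slowest stage, and the 50% reduction target.

```python
from datetime import datetime
from statistics import median

# Stage order follows the audit described in move One.
STAGES = ["data_prep", "security_review", "model_selection", "evaluation", "release"]


def stage_days(entered: dict, deployed: str) -> dict:
    """Days spent in each stage, from ISO dates of stage entry and deployment."""
    marks = [datetime.fromisoformat(entered[s]) for s in STAGES]
    marks.append(datetime.fromisoformat(deployed))
    return {
        stage: (marks[i + 1] - marks[i]).total_seconds() / 86_400
        for i, stage in enumerate(STAGES)
    }


def audit(projects: list) -> dict:
    """Median days per stage, median lead time, slowest stage, and a 50% target."""
    per_project = [stage_days(p["entered"], p["deployed"]) for p in projects]
    medians = {s: median(d[s] for d in per_project) for s in STAGES}
    lead_time = median(sum(d.values()) for d in per_project)
    return {
        "median_days_by_stage": medians,
        "median_lead_time_days": lead_time,
        "bottleneck": max(medians, key=medians.get),
        "target_lead_time_days": lead_time / 2,
    }


# Illustrative records only, not real project data.
projects = [
    {
        "entered": {
            "data_prep": "2025-01-06",
            "security_review": "2025-01-20",
            "model_selection": "2025-02-17",
            "evaluation": "2025-02-24",
            "release": "2025-03-03",
        },
        "deployed": "2025-03-10",
    },
    {
        "entered": {
            "data_prep": "2025-02-03",
            "security_review": "2025-02-13",
            "model_selection": "2025-03-05",
            "evaluation": "2025-03-10",
            "release": "2025-03-17",
        },
        "deployed": "2025-03-20",
    },
]

report = audit(projects)
print(report)
```

Medians are used rather than means so that one stalled project does not define the published lead time. With a larger sample, a high percentile per stage would be a reasonable addition.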

The Uncomfortable Implication

If your 2025 plan centers on “AI capex,” you’re planning around the wrong scarce resource. The scarcest resource in enterprise AI is change throughput: How quickly your cloud, data, and security apparatus can absorb new information and ship a safer, cheaper version of what you already run. Capex can help, but it rarely improves throughput.

The Optimistic Takeaway

This is solvable. Cloud teams that reframe AI as a portfolio operations challenge, rather than a capital expenditure race, are already shipping faster and spending less. They bias for modularity, treat data as a product, federate decisions with guardrails, and fund outcomes at the unit level. They also talk like operators, not tourists: they know where time goes, and they design roadmaps to reclaim it.

Before approving the next major expenditure, conduct a two-week experiment. Pick one use case, define the golden unit, and measure end-to-end lead time. Optimize only what moves those two numbers. If the cheapest path is fewer graphics processing units and more changes to schemas, cache layers, or retrieval graphs, take it.